Anyone who’s followed Freetrade’s progress since our launch will be aware that the majority of our business logic is handled by Google Cloud Functions, after scrapping initial complex Kubernetes projects in favour of the scaling offered by serverless functions.

When it came to building our Invest by Freetrade platform, with a year’s serverless experience under our belt, we made the decision to continue with serverless for the new platform. With the knowledge that Invest would need to scale far beyond our customer numbers at the time, we knew the scalability benefits would be invaluable.

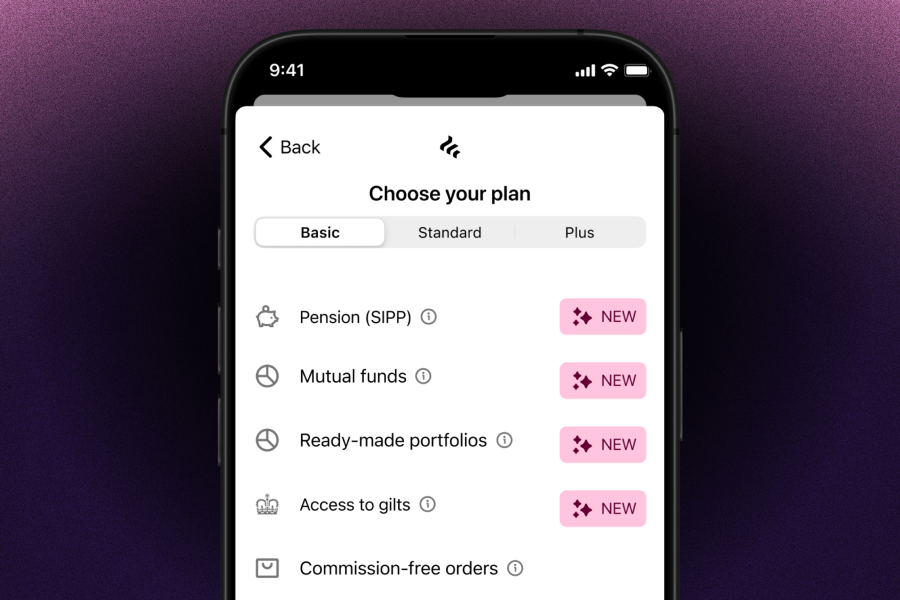

That decision, along with our move to adopt the then-beta Cloud Firestore as our database of choice, has proven itself repeatedly. The platform now supports over 250,000 users — an almost five-fold increase compared to when we started building Invest. But, as with any project built to scale, it has not been without its problems.

Context - Cold Starts

If you’re not familiar with Google Cloud Functions, they’re similar to most functions-as-a-service (serverless) offerings: anyone who’s used AWS Lambda or Azure Functions will be familiar with the concepts involved.

For those who haven’t: with functions-as-a-service, rather than reserving dedicated computing resources on a monthly basis, you pay only for the resources used while a function is executing.

One downside is that, behind the scenes, the cloud provider regularly scales containers up and down as part of its own management. This can cause side effects when Google has to spin up a new container to serve a new request.

This extra process takes longer than handling the request with an active container, which leads to a performance hit on the first request. This is known as a cold start. With a cold start often meaning a 10 or 20 second performance hit on the first request, we try to avoid them wherever possible.
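
To make the distinction concrete, here is a minimal sketch of how a function could tell a cold invocation from a warm one. It relies on the fact that module-level code runs once per container instance, while the handler runs once per request. The helper `describeInvocation` and its field names are hypothetical and are not part of our codebase or of the Cloud Functions SDK.

```typescript
// Module scope is evaluated once per container instance, i.e. once per cold start.
const instanceStartedAt = Date.now();
let invocationCount = 0;

interface InvocationInfo {
  coldStart: boolean; // true only for the first request served by this instance
  invocation: number; // how many requests this instance has served so far
  instanceAgeMs: number; // time since the instance loaded this module
}

// Hypothetical helper: call it at the top of a handler to tag logs or metrics.
function describeInvocation(now: number = Date.now()): InvocationInfo {
  invocationCount += 1;
  return {
    coldStart: invocationCount === 1,
    invocation: invocationCount,
    instanceAgeMs: now - instanceStartedAt,
  };
}
```

Logging the `coldStart` flag alongside request latency is one way to measure how much of the slow tail is down to cold starts rather than to the function’s own logic.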

Step 1: Don’t cause extra cold starts

Within software engineering, there are a few transient errors you can expect to crop up. Whether it’s network failures or transient Google Cloud failures, these errors are expected to happen at least occasionally under business-as-usual (BAU) conditions.

Each of these failures, if not handled, will lead to Google recycling the container used for the function, which in turn leads to a cold start. This makes a lot of sense from Google’s side.

A fresh container often solves a lot of transient issues. But if we allow this to happen for all scenarios we end up with a lot of unnecessary cold starts. In the scenario of a hard failure, this will also have a knock-on effect as Google starts to throttle the number of instances in a function that fails often.

The first — and most basic — tool in our arsenal is the humble retry. We use retries extensively at pretty much every level within our systems.

The most basic form of these is in-process retries wrapped around network calls or transactional writes that we expect to fail on occasion. By having automatic retries around these sections of logic, when an error is thrown, the handler automatically retries the operation (with configurable backoff and number of attempts), usually succeeding. This has drastically reduced the number of errors which bubble up to the global scope in our Cloud Functions, reducing our number of unnecessary cold-starts.

    const command = new RequestFundsWithdrawalClientAccountCommand(req.params.accountId, payload)

    await executeWithRetry(
      async () => {
        await dispatchCommand(
          command,
          { ...new DefaultCommandContext(), idempotencyKey: payload.withdrawalId },
          createDispatchContextFromRequest(req)
        )
      }, // async () => Promise<T>
      10, // max attempts
      100 // wait factor (ms)
    )
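
For readers who want to see what a helper like `executeWithRetry` might look like, here is a minimal sketch. It is not our actual implementation: the argument order follows the call above, but the exponential backoff and the 5 second cap are assumptions made for illustration.

```typescript
const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

// Runs `operation` until it resolves or `maxAttempts` attempts have failed.
async function executeWithRetry<T>(
  operation: () => Promise<T>,
  maxAttempts: number,
  waitFactorMs: number,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      if (attempt < maxAttempts) {
        // Assumed policy: double the wait after each failure, capped at 5 seconds.
        const delayMs = Math.min(waitFactorMs * 2 ** (attempt - 1), 5000);
        await sleep(delayMs);
      }
    }
  }
  // Every attempt failed: surface the last error so outer retries can take over.
  throw lastError;
}
```

Rethrowing the last error matters: it lets the failure reach the platform-level retry rather than being silently swallowed in-process.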

We also make heavy use of Google’s retry-on-failure option for most of our Cloud Functions. For event-triggered functions, Google guarantees “one or more” executions with retries enabled, with a backoff that starts at several retries per second and keeps retrying for multiple days. This means that if one part of our system goes down, even for hours, the functions will retry and execute successfully once it has recovered. It also means that container-level failures are still handled automatically after the in-process retries fail.

The eagle-eyed may have noticed in the code sample above that using retries also forces us to adopt better programming patterns and ensure that each unique action is “idempotent”. Essentially, this means we only process an action once, regardless of the number of attempts. Otherwise, retries or double-fires on a Cloud Function could mean someone’s deposit or withdrawal being processed twice.
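
As an illustration of the idea, the sketch below deduplicates work by idempotency key. The `IdempotentExecutor` class is hypothetical, and it uses an in-memory map purely to keep the example self-contained. A real system would need a durable store with transactional writes, because a retry may land on a different container.

```typescript
type Handler<T> = () => Promise<T>;

class IdempotentExecutor<T> {
  // In-memory stand-in for a durable store (assumption for illustration only).
  private readonly results = new Map<string, Promise<T>>();

  run(idempotencyKey: string, handler: Handler<T>): Promise<T> {
    const existing = this.results.get(idempotencyKey);
    if (existing) {
      // A retry or double-fire: reuse the earlier outcome, do not run the handler again.
      return existing;
    }
    const pending = handler().catch((error) => {
      // Forget failed attempts so that a later retry can run the handler again.
      this.results.delete(idempotencyKey);
      throw error;
    });
    this.results.set(idempotencyKey, pending);
    return pending;
  }
}
```

With this shape, calling `run("withdrawal-123", handler)` twice executes `handler` once, while a failed first attempt still leaves room for a retry to succeed.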

We also learnt the hard way that we have to be careful with Google’s built-in retries, as a hard failure that loops infinitely can be costly. While the financial implications are clear, performance will also suffer due to the repeated cold starts. To this end, we ended up writing a wrapper around the Cloud Functions SDK methods for handling Pub/Sub and event triggers. We use this to limit time-sensitive retries and to report success/failure rate metrics, which helps to simplify paging engineers.
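
A wrapper of this kind could look roughly like the sketch below. It is not our production code: `withRetryWindow`, `EventContextLike` and `RetryMetrics` are hypothetical names, and the context shape (an event ID plus an ISO 8601 timestamp) is an assumption. The technique is to drop events older than a cut-off by returning normally, which tells the platform to stop retrying, and to rethrow all other failures so that the built-in retry still fires.

```typescript
interface EventContextLike {
  eventId: string;
  timestamp: string; // ISO 8601 time at which the event was first published
}

type EventHandler<E> = (event: E, context: EventContextLike) => Promise<void>;

interface RetryMetrics {
  succeeded: number;
  failed: number;
  expired: number;
}

function withRetryWindow<E>(
  handler: EventHandler<E>,
  maxAgeMs: number,
  metrics: RetryMetrics,
  now: () => number = () => Date.now(),
): EventHandler<E> {
  return async (event, context) => {
    const ageMs = now() - Date.parse(context.timestamp);
    if (ageMs > maxAgeMs) {
      // Too old to be useful: resolve normally so the platform stops retrying.
      metrics.expired += 1;
      return;
    }
    try {
      await handler(event, context);
      metrics.succeeded += 1;
    } catch (error) {
      metrics.failed += 1;
      throw error; // Rethrow so that retry-on-failure schedules another attempt.
    }
  };
}
```

In this sketch the counters are plain numbers. In practice they would be sent to whatever monitoring system drives alerting.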

Step 2: Keep functions warmer, longer

Some of our most complex functions, such as placing orders, are also part of our core user experience. Because of their complexity, a cold start in one of our core order functions can double or even triple the time taken to execute a user’s order, which becomes very noticeable when the user is waiting in-app.

For these functions, we have another scheduled function we call the “function warmer”. This function runs every few minutes and sends a request to each of our high-priority functions, ensuring they are kept warm by Google.
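
The core of such a warmer can be sketched in a few lines. Everything named here is an assumption made for illustration: the `x-warmup-request` header, the `warmFunctions` and `isWarmupRequest` helpers, and the injected `HttpGet` function, which stands in for a real HTTP client so the example stays self-contained.

```typescript
type HttpGet = (
  url: string,
  headers: Record<string, string>,
) => Promise<{ status: number }>;

// Hypothetical header that lets a target function recognise warm-up traffic.
const WARMUP_HEADER = "x-warmup-request";

// Pings every URL in parallel and reports which targets responded.
async function warmFunctions(
  urls: string[],
  get: HttpGet,
): Promise<Record<string, boolean>> {
  const results: Record<string, boolean> = {};
  await Promise.all(
    urls.map(async (url) => {
      try {
        const response = await get(url, { [WARMUP_HEADER]: "true" });
        results[url] = response.status < 500;
      } catch (error) {
        // One unreachable function must not stop the others from being warmed.
        results[url] = false;
      }
    }),
  );
  return results;
}

// On the receiving side, a handler can return early for warm-up requests.
function isWarmupRequest(headers: Record<string, string | undefined>): boolean {
  return headers[WARMUP_HEADER] === "true";
}
```

On a runtime with a global `fetch`, the `get` argument could be as simple as `(url, headers) => fetch(url, { headers })`. Returning early on warm-up requests keeps the ping cheap and avoids running real business logic on a schedule.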

Step 3: Minimize the impact when cold starts do happen

As a final step, we’re also in the process of migrating all our functions to a new and improved paradigm we’ve nicknamed ‘monofunctions’ - a combination of “monorepo” and “functions”. This is the monorepo paradigm commonly used with microservices with the addition of Lerna and Rollup.js to support building serverless functions.

While this requires a lot of effort to maintain, as every shared piece of logic or code has to be refactored out into discrete packages, the end result is that each individual function has a much smaller resource footprint, and thus cold-starts are significantly less painful. This migration also gives us faster and more controlled deployments. Everything is handled via Terraform, which means more consistent deployments and automatic separation of service accounts for different functions.

Do you use serverless or want to work with it? We’re hiring for a bunch of engineering roles at Freetrade. Check out our careers page to apply.

This should not be read as personal investment advice and individual investors should make their own decisions or seek independent advice. This article has not been prepared in accordance with legal requirements designed to promote the independence of investment research and is considered a marketing communication. When you invest, your capital is at risk. The value of your portfolio can go down as well as up and you may get back less than you invest. Past performance is not a reliable indicator of future results. Freetrade is a trading name of Freetrade Limited, which is a member firm of the London Stock Exchange and is authorised and regulated by the Financial Conduct Authority. Registered in England and Wales (no. 09797821).