Introduction

This post is intended as an introduction into how our Data function operates here at Freetrade.

Today, we’ll start with a whistle-stop tour of our infrastructure and attempt to shed some light on what goes into telling the story of Freetrade for our management, investors and customers alike.

At the heart of everything we do is our Data Warehouse. We use it to track our growth, measure our KPIs, produce insights for our product and marketing teams and to assist the rest of the engineering team in their development activities.

And as we now have over 300,000 customers, that’s a lot of data.

In the following sections you’ll see how we leverage serverless infrastructure and managed solutions like BigQuery, Cloud Functions, Terraform and Looker to develop a Data Warehouse that can scale to match the phenomenal growth of Freetrade.

BigQuery

At the heart of everything that we do right now is BigQuery. BigQuery is a managed Data Warehouse solution provided by Google. Like the rest of our techstack, it’s a serverless platform. It allows you to run SQL queries, executed through the BigQuery UI or through one of their many support APIs.

We’re not going into much detail on BigQuery other than to say it’s awesome.

You can churn through terabytes of data in minutes. Queries that in previous professional lives would require spinning up a dedicated Hadoop cluster, or through cloning a relational database instance, can be answered in a few minutes after drafting a query in your web browser. Since its launch it has been a real game changer for Data Warehousing teams across the world.

As such, BigQuery is our primary repository for data. Almost all the data that we produce as a company gets ingested into it and currently, in Data, all of our data products are driven off the back of queries to the BigQuery API. That may change in the future, but so far BigQuery has addressed most of the requirements we have had as a rapidly growing company.

The difficulty of course with any Data Warehouse solution is not the Data Warehouse itself, but rather in all the ancillary work that goes into ingesting and then surfacing all the fruits of your data modelling and analysis to stakeholders.

Ingestion

Our backend infrastructure, built largely on top of Firebase and Google Cloud Functions, has two primary data stores in use, Cloud Firestore and Firebase Realtime Database.

Both of these data stores are document oriented databases and so not very suited to the performance of large scale aggregation and analytics. So the first step in being able to measure our success here at Freetrade is in ingesting the data produced by our applications into a datastore more suited for analysis.

Cloud Functions

You might have seen the previous posts discussing our serverless infrastructure for our Apps Backend and our Invest Platform. For the Data Warehouse, we leverage the same approach when it comes to the ingestion of data.

To do this, we take advantage of the Cloud Firestore Triggers and Firebase Realtime Database Triggers APIs. For every document type in our databases we create an instance of a Cloud Function whose purpose is to replicate the current state of that document into our data warehouse whenever it changes. This replication is performed using the BigQuery REST API.

And that’s it. There’s been a new document created? Write it to BigQuery. There’s been a change to the document? Write it again. It’s a simple approach that gives us flexibility. Depending on our analysis needs, we can either refer to the latest version of the document or rebuild its entire history. The only downside is the additional cost of storage.

These functions get deployed and maintained in the same manner as the rest of our cloud functions, making them easy for anyone across the engineering function to maintain or enhance.

On occasion, we’ve also had to ingest data from a third party via a REST API or similar.

When this happens, we largely take the same approach of writing a dedicated Cloud Function or two. The only difference is:

- Rather than writing that function in Typescript, we’ll author it in Python to take advantage of the DataFrames BigQuery API for streaming data into BigQuery.

- We’ll generally trigger those functions on a schedule; for which we’ll implement them as HTTP functions and trigger them with Cloud Scheduler.

Natively Supported BigQuery Services

Of course, we rely on other sources of data than just our backend databases, and for that we make heavy use of BigQuery’s Data Transfer Service to ingest Data from Cloud Storage or Amazon’s S3. This makes the ingestion of flat data like CSVs or Avro files simply a matter of configuration.

We also make use of Scheduled Queries. This allows us to ingest things like data from external databases (like Postgres) using BigQuery’s Ferated Queries functionality, or from other Google data sources like Sheets which we ingest to facilitate KPI reporting on our business processes.

The advantage of using BigQuery’s native ingestion is that it can be very quickly and easily configured through the BigQuery UI. However, this obviously isn’t a scalable approach, which is why most of our ingestion activities are configured through Terraform, which we’ll discuss in more detail later in the post.

Modelling

Here at Freetrade we follow the ELT pattern for integrating Data in our Warehouse. We apply most of our data transformations and enrichment directly in BigQuery. Another post will detail our approach to how we model and transform our data; for now let’s focus on the infrastructure and tooling. And that’s DBT.

DBT

DBT (Data Build Tool) was originally a tool to manage the transformation of data in the Data Warehouse. What it essentially provides is a way to write code (using a mix of jinja and SQL) against your data models and then a mechanism to compile and execute said code against your database.

We’re great fans of DBT here at Freetrade. Most of our data models are specified in DBT; we make heavy use in particular of its functionality for partitioning in BigQuery and incremental models. We leverage jinja to paginate queries when using federated queries so we don’t overwhelm the external data source. We leverage DBT Cloud to schedule and execute our transformation jobs.

And we leverage DBT Cloud again for our alerting through its Slack integration.

Visualisation

Often the quickest and easiest way to surface the answer to specific questions of our Data Warehouse is through BigQuery’s integration with Google Sheets, and in general it’s something we make extensive use of here at Freetrade, especially when it comes to supporting our engineers and operations teams to resolve specific issues.

But in general, when it comes to surfacing data to our stakeholders, we take one of two approaches: Data Studio or DBT.

Data Studio

Data studio is quick and easy to get up and running with. Specify a table in BigQuery as a data source then quickly start putting together a report with filters and charts all through their WYSIWYG editor.

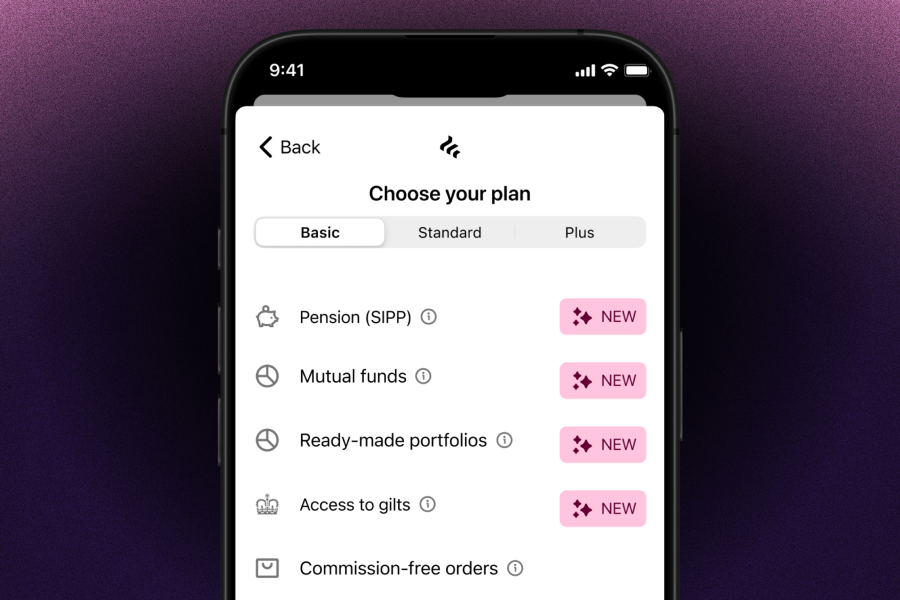

We tend to leverage Data Studio to put together our basic operational dashboards; searching particular clients, finding a certain invoice; that type of thing. Generally speaking, when a new feature launches at Freetrade, in as little as a few minutes we can have a new dashboard up and running enabling our operations team to support it.

Data Studio is not without its limitations however. Its ease of use can often be its own undoing; when the underlying models change it can be hard to understand the impact it might have on our dashboarding and large aggregations spanning multiple datasets can be time-consuming to implement. That’s when a more programmatic approach becomes beneficial, and for that we use Looker.

Looker

Looker is used for much higher level data aggregations. What’s the average value of our customer’s portfolios? How many signed up to Plus in the past month? Looker is fantastic at answering those kinds of questions and indeed most of our Business Intelligence activities are facilitated by Looker.

That’s largely implemented through the power of LookML; we make extensive use of LookML both to abstract our data models in BigQuery and also to author in code our business critical dashboards. Its validation tool is extremely useful in helping us to understand the impact of changes to our data models on our stakeholders in the context of how they consume the data.

Tying it all Together

Terraform

Terraform is used to manage all our infrastructure in Data currently. It’s used to deploy and schedule the invocation of our cloud functions, specify our scheduled queries, manage our secrets, define our data transfer jobs from Cloud Storage and S3, and even to define certain schemas that are used for ingestion into the data warehouse.

Adopting Terraform has been a game changer for us at Freetrade. In the early days of the Warehouse, our focus on speed and agility meant that we would configure many ingestions and transformations directly through the BigQuery UI. But overtime, those manual configurations became difficult to maintain and search for.

Now, all of our infrastructure is specified in one place and rolling out a new environment for testing purposes is a matter of executing a handful of commands at the CLI. In fact, once you become familiar with Terraform, and have codified your common patterns in modules, configuring a new ingestion or transformation job becomes much quicker than fiddling with all those dropdowns and text inputs in the UI. You’ll never look back.

Final Thoughts

This has been a whistle stop tour of Data’s infrastructure and tooling at Freetrade currently. We can’t say it’s been an entirely smooth journey to arrive at this point; there were some hard lessons learned along the way but the flexibility and scalability afforded by our technology choices have made the journey to this point much easier than it might otherwise have been.

Future posts will go into more detail on the approaches we take to modelling that data and how we organise the data function at Freetrade. But hopefully this has given you an indication of the exciting work we do here in the Data function at Freetrade.

P.S. did we mention we're hiring?